Approach

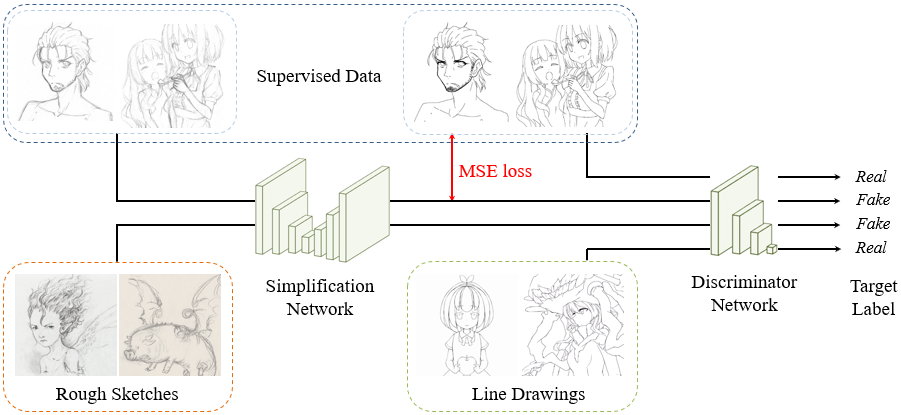

Our approach uses Convolutional Neural Networks to convert rough sketches into clean, simplified line drawings. We build upon our previous sketch simplification work and augment it with an auxiliary discriminator network, which allows us to train jointly on supervised and unsupervised data. The discriminator network is trained to distinguish real line drawings from those generated by our sketch simplification network, while the sketch simplification network is trained to fool the discriminator network.

We train the model with data from three sources: fully supervised pairs of rough sketches and line drawings, unsupervised rough sketches, and unsupervised line drawings. For the fully supervised data we employ both a supervised loss and the discriminator, while for the unsupervised rough sketches we employ only the discriminator to train the sketch simplification model. The discriminator is trained using all the line drawings as positives and the outputs of the sketch simplification model on all the rough sketches as negatives.
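The way the losses are combined across the three data sources can be sketched as follows. This is a minimal illustration, not the implementation from the paper: the function names, the weight `alpha`, the choice of mean squared error as the supervised loss, and the "fool the discriminator" term written as a cross-entropy against the "real" label are all assumptions made for the example. The discriminator output is taken to be a probability that its input is a real line drawing.

```python
import math


def bce(p, target):
    """Binary cross-entropy for one probability p and a 0/1 target."""
    eps = 1e-12
    p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def mse(output, target):
    """Mean squared error between two equally sized pixel lists (assumed supervised loss)."""
    return sum((o - t) ** 2 for o, t in zip(output, target)) / len(output)


def simplifier_loss(output, d_on_output, target=None, alpha=1.0):
    """Loss for the sketch simplification network on one sample.

    output      -- pixels produced by the simplification network
    d_on_output -- discriminator probability that `output` is a real line drawing
    target      -- ground-truth line drawing, or None for an unsupervised rough sketch
    alpha       -- hypothetical weight on the adversarial term
    """
    adversarial = bce(d_on_output, 1.0)  # low when the discriminator is fooled
    if target is None:
        # Unsupervised rough sketch: only the discriminator provides a signal.
        return alpha * adversarial
    # Supervised pair: supervised loss plus the discriminator term.
    return mse(output, target) + alpha * adversarial


def discriminator_loss(d_on_line_drawings, d_on_generated):
    """All real line drawings are positives, all generated outputs are negatives."""
    positives = [bce(p, 1.0) for p in d_on_line_drawings]
    negatives = [bce(p, 0.0) for p in d_on_generated]
    return sum(positives + negatives) / (len(positives) + len(negatives))
```

In a training loop under these assumptions, `simplifier_loss` would be evaluated on both supervised pairs and unsupervised rough sketches, while `discriminator_loss` would receive the discriminator's outputs on every available line drawing (paired or not) and on every simplified rough sketch.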

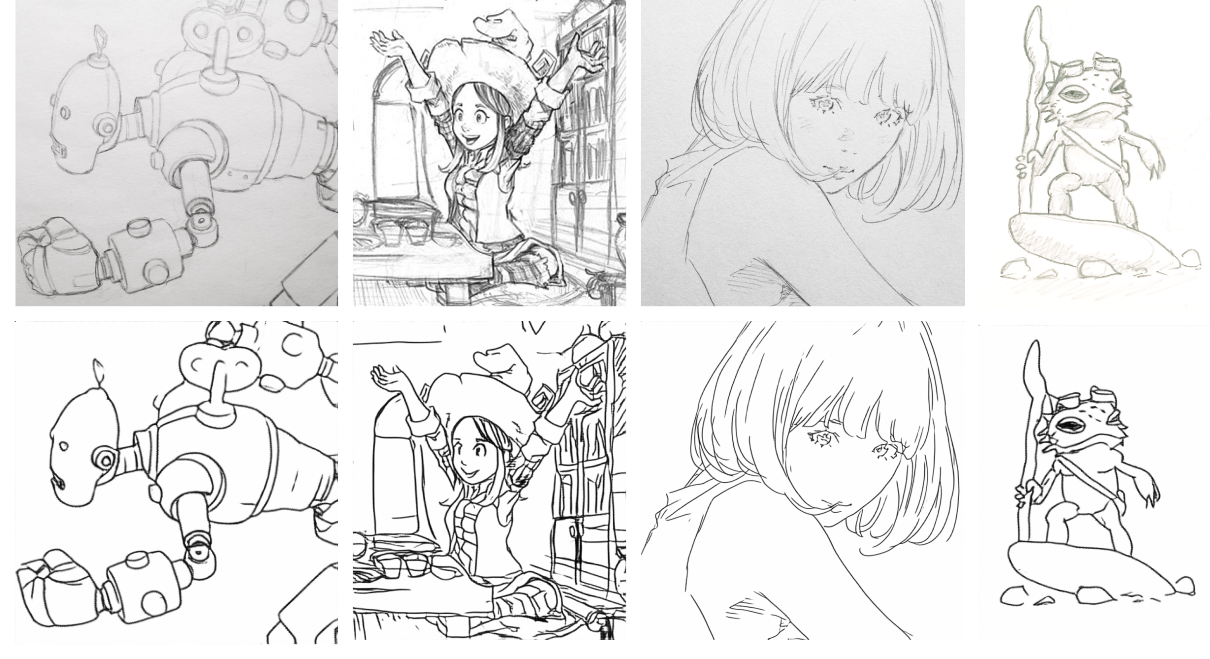

Results

We evaluate our approach on challenging real scanned rough sketches as shown above. The first and third column sketches are copyrighted by Eisaku Kubonouchi (@EISAKUSAKU) and only non-commercial research usage is allowed, while the image in the second column is copyrighted by David Revoy (www.davidrevoy.com) under CC-by 4.0.

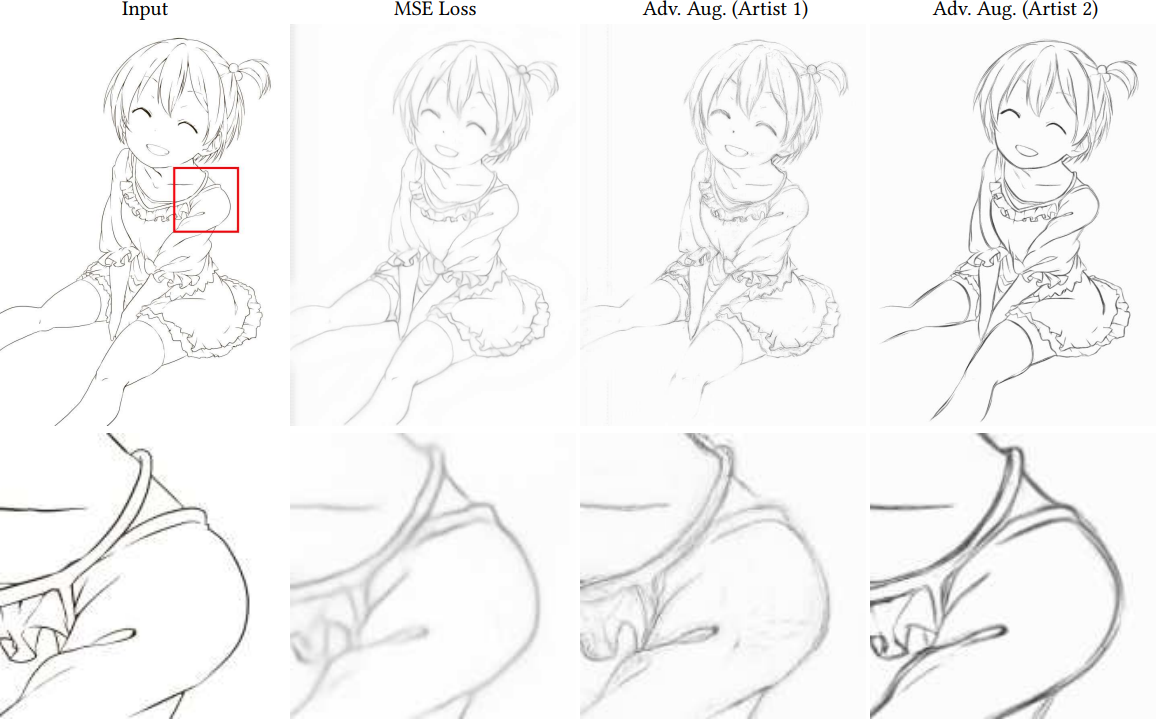

Pencil Drawing Generation

Our approach is not limited to sketch simplification and can be used for a variety of complicated tasks. We illustrate this by training our model to generate pencil drawings from clean sketch images. Examples from two different models, each trained on data from a different artist, are shown above.

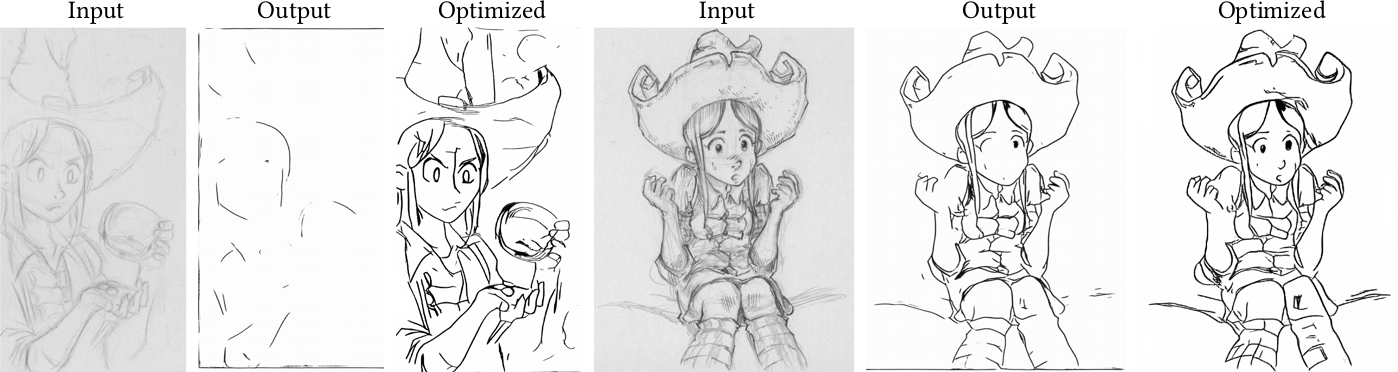

Single Image Optimization

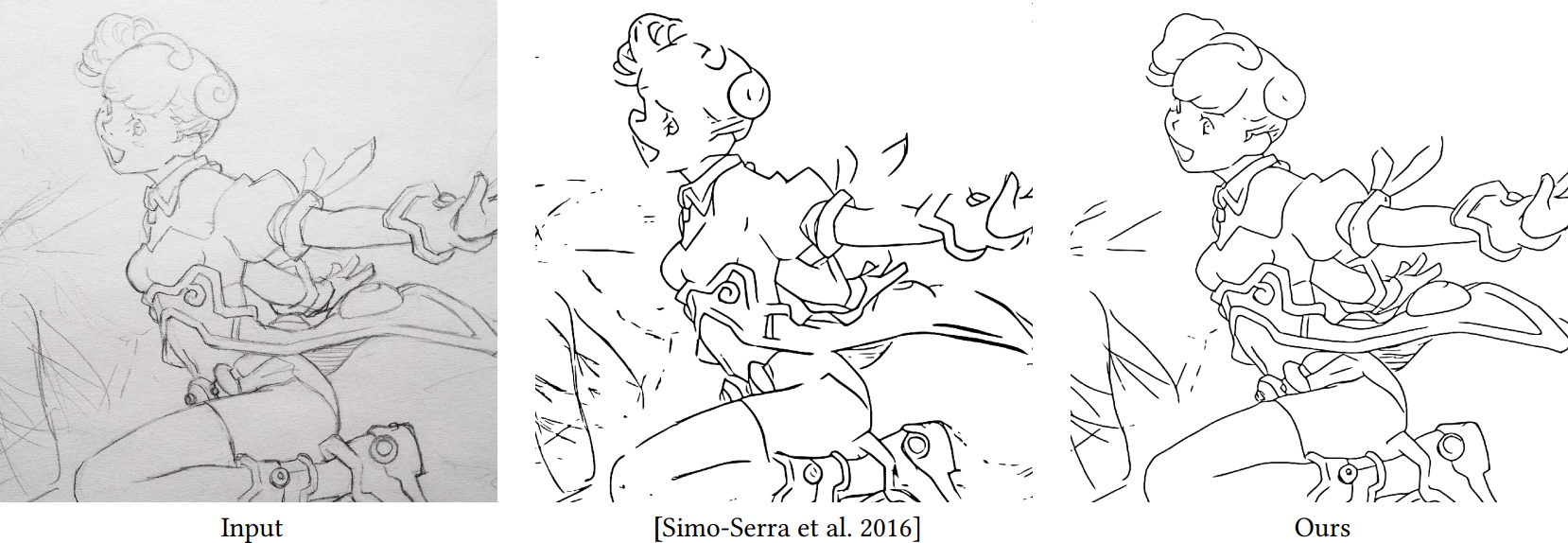

Because our approach can use unsupervised training data, models can be trained not only in an inductive fashion, i.e., to produce good sketch simplifications for unseen data, but also in a transductive fashion, i.e., optimized to produce good results on the testing data itself. We note that this does not require ground truth annotations. In the results shown above, the initial output of the model is poor, but after optimizing the model to output more realistic sketch drawings, the results become significantly better. Images copyrighted by David Revoy (www.davidrevoy.com) under CC-by 4.0.
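The transductive idea can be illustrated with a deliberately tiny toy, which is not the procedure from the paper. Here the "model" has a single hypothetical parameter `gain`, and a hand-written `realism` score stands in for the trained discriminator (it simply rewards near-binary pixels, on the assumption that clean line drawings are close to black and white). The point is only that the parameter is tuned on the test image itself, with no ground truth involved.

```python
import math


def simplify(rough, gain):
    """Toy one-parameter 'model': sigmoid contrast stretch around mid-gray."""
    return [1.0 / (1.0 + math.exp(-gain * (x - 0.5))) for x in rough]


def realism(pixels):
    """Hypothetical stand-in for a discriminator score.

    Returns 1.0 when every pixel is exactly 0 or 1 and 0.0 when every
    pixel is 0.5; higher means 'looks more like a clean line drawing'.
    """
    return 1.0 - 4.0 * sum(p * (1.0 - p) for p in pixels) / len(pixels)


def optimize_on_test_image(rough, gain=1.0, lr=0.5, steps=50, h=1e-4):
    """Gradient ascent on the realism score using only the test image."""
    for _ in range(steps):
        # Central finite-difference estimate of d(realism)/d(gain).
        up = realism(simplify(rough, gain + h))
        down = realism(simplify(rough, gain - h))
        gain += lr * (up - down) / (2.0 * h)
    return gain


# Usage: tune the toy model on one unlabeled test image.
test_image = [0.1, 0.4, 0.6, 0.9]
score_before = realism(simplify(test_image, 1.0))
tuned_gain = optimize_on_test_image(test_image)
score_after = realism(simplify(test_image, tuned_gain))
```

In the actual approach the discriminator is a learned network rather than a fixed score, and the parameters being updated are those of the sketch simplification network; the toy only mirrors the structure of optimizing on the test data without annotations.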

For more details and results, please consult the full paper.

This work was partially supported by JST CREST Grant Number JPMJCR14D1, and JST ACT-I Grant Numbers JPMJPR16UD and JPMJPR16U3.