Approach

1. The inker's main purpose is to translate the penciller's graphite pencil lines into reproducible, black, ink lines.

2. The inker must honor the penciller's original intent while adjusting any obvious mistakes.

3. The inker determines the look of the finished art.

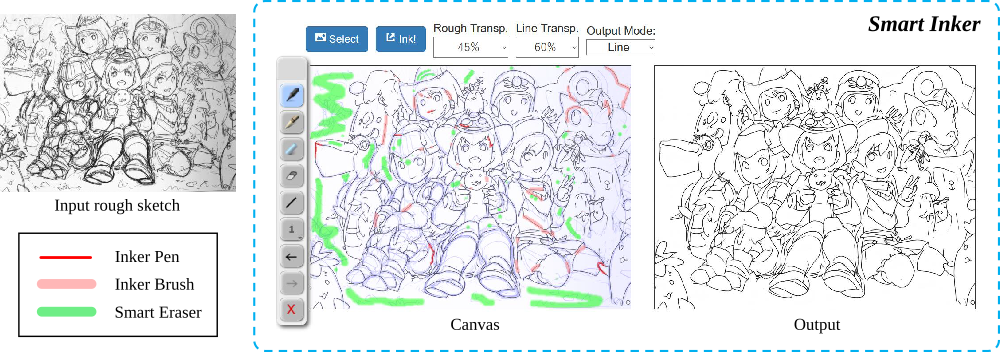

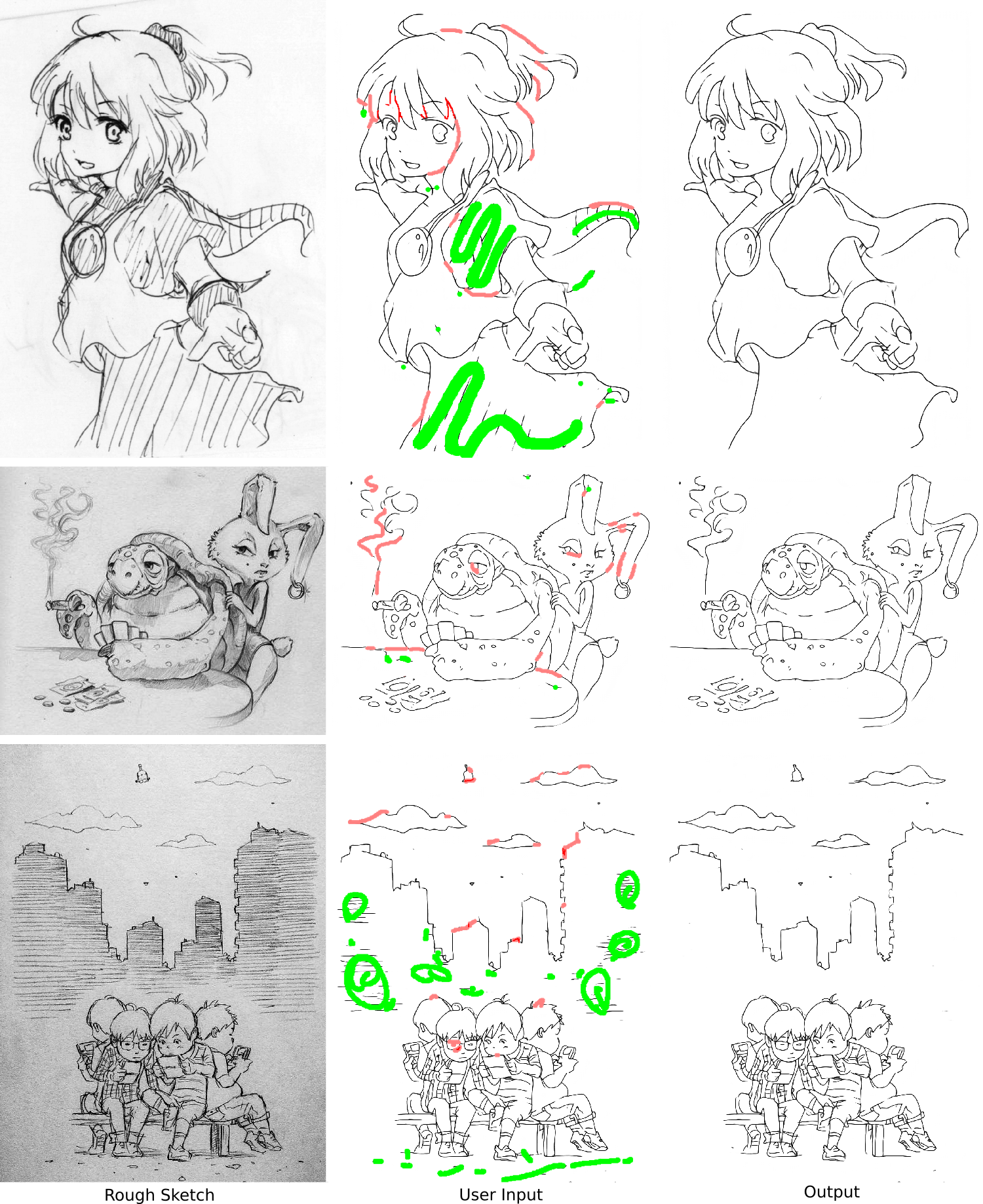

Our approach uses fully convolutional networks to interactively ink rough sketch drawings in real time. The interactivity is built around three tools:

- Inker Pen: Allows fine-grained control of output lines.

- Inker Brush: Can be used to quickly connect and complete strokes.

- Smart Eraser: Erases unnecessary lines while taking into account the context.

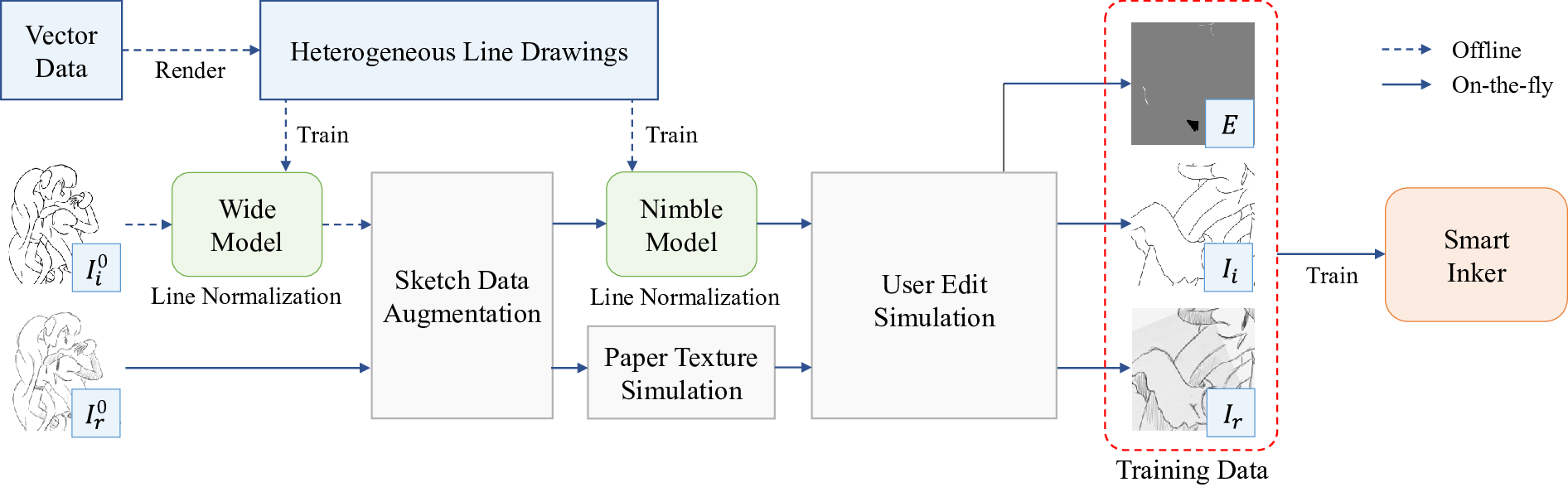

We use a data-driven approach to design the tools, leveraging a large dataset of rough sketch and line drawing pairs. The tools are created by training the model with simulated user edits that modify the input data.
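As a rough illustration of how tool edits could reach a fully convolutional model, one option is to pass them as extra input channels alongside the rough sketch. The sketch below is an assumption rather than the encoding described in the paper: `build_input`, the channel order in `TOOLS`, and the 0/1 mask convention are all hypothetical.

```python
from typing import Dict, List

Image = List[List[float]]  # row-major grayscale image, values in [0, 1]

# Hypothetical channel order; the paper may encode edits differently.
TOOLS = ("pen", "brush", "eraser")


def blank(height: int, width: int) -> Image:
    """Return an all-zero image, meaning no edit was made with that tool."""
    return [[0.0] * width for _ in range(height)]


def build_input(sketch: Image, edits: Dict[str, Image]) -> List[Image]:
    """Stack the rough sketch with one edit-mask channel per tool."""
    height, width = len(sketch), len(sketch[0])
    channels = [sketch]
    for tool in TOOLS:
        mask = edits.get(tool, blank(height, width))
        if len(mask) != height or any(len(row) != width for row in mask):
            raise ValueError(f"{tool} mask does not match the sketch size")
        channels.append(mask)
    return channels
```

With this layout, a brush stroke would be supplied as `build_input(sketch, {"brush": stroke_mask})`, and tools that were not used contribute all-zero channels.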

Smart Tools

Next, we describe the tools in more detail and show several examples of each.

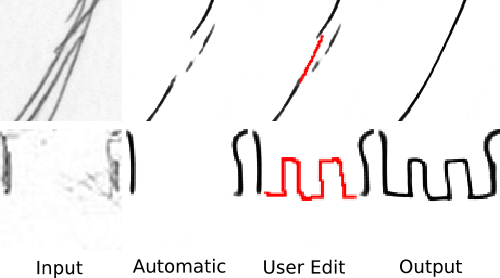

Inker Pen

The inker pen is a tool designed for accurate edits. However, unlike pen tools found in standard software, this tool still reacts to the original rough sketch and output. This allows the tool to naturally connect and complete line segments, even when the user edit is not perfect.

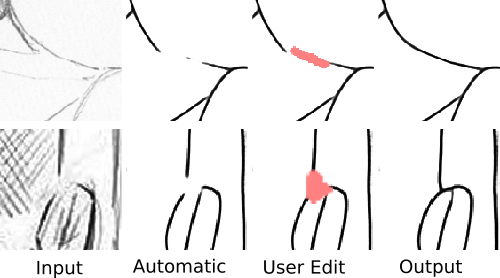

Inker Brush

The inker brush is a tool designed for fast and easy editing: the user roughly indicates which line segments have to be completed. In contrast with the inker pen, it does not allow for edits that are as accurate, and leaves the inking to the model. In general, this tool is preferred over the inker pen, which is mainly used when accurate and detailed inking is necessary.

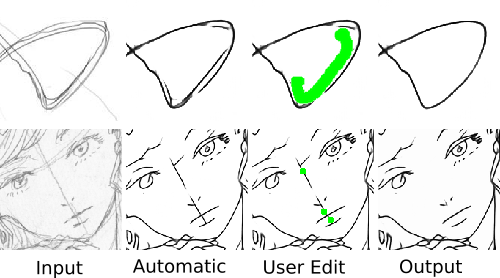

Smart Eraser

The smart eraser acts similarly to a normal eraser, but it also reacts to the rough sketch and output lines. This preserves important lines while erasing the unnecessary parts pointed out by the user.

Model

Our model is a fully convolutional network with an encoder-decoder architecture. To train the model to ink rough sketches, we introduce two important changes to the training procedure. First, we normalize the training line drawings using auxiliary neural networks trained on vector line drawing data. This allows the model to produce clean outputs without post-processing or complicated loss functions, and is essential for performance. Second, we simulate user edits in a realistic manner, which is what creates the different tools used by our model. The simulation goes beyond generating the edits themselves: it also manipulates the input training data, adding noise to be erased or erasing lines to be completed, following the simulated user edits.
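The edit simulation described above can be pictured with a toy example. This is a minimal sketch under stated assumptions, not the authors' procedure: the function names, the square patch shape, the pixel-level noise, and the 1.0 = ink convention are all hypothetical, and the realistic stroke simulation from the paper is replaced by simple random choices.

```python
import random
from typing import List, Tuple

Image = List[List[float]]  # assumed convention: 1.0 = ink, 0.0 = blank paper


def simulate_completion_edit(
    sketch: Image, target: Image, rng: random.Random, size: int = 2
) -> Tuple[Image, Image]:
    """Erase a square patch of the sketch; mark the target lines in it as the edit."""
    h, w = len(sketch), len(sketch[0])
    size = min(size, h, w)
    top = rng.randrange(h - size + 1)
    left = rng.randrange(w - size + 1)
    new_sketch = [row[:] for row in sketch]
    mask = [[0.0] * w for _ in range(h)]
    for y in range(top, top + size):
        for x in range(left, left + size):
            new_sketch[y][x] = 0.0     # line removed from the input
            mask[y][x] = target[y][x]  # simulated pen/brush stroke
    return new_sketch, mask


def simulate_eraser_edit(
    sketch: Image, target: Image, rng: random.Random, n_noise: int = 3
) -> Tuple[Image, Image]:
    """Add noise pixels where the target is blank; mark them in the eraser mask."""
    h, w = len(sketch), len(sketch[0])
    candidates = [(y, x) for y in range(h) for x in range(w) if target[y][x] == 0.0]
    chosen = rng.sample(candidates, min(n_noise, len(candidates)))
    new_sketch = [row[:] for row in sketch]
    mask = [[0.0] * w for _ in range(h)]
    for y, x in chosen:
        new_sketch[y][x] = 1.0  # spurious ink the model should remove
        mask[y][x] = 1.0        # simulated eraser stroke
    return new_sketch, mask
```

In both cases the training target stays the clean line drawing, so the model is pushed to complete lines where a pen or brush edit is given and to remove ink where an eraser edit is given.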

Results

We evaluate our approach on challenging real scanned rough sketches, as shown above. The image in the second row is copyrighted by David Revoy (www.davidrevoy.com) and licensed under CC BY 4.0, while the rough sketch in the third row is copyrighted by Eisaku Kubonouchi (@EISAKUSAKU) and may be used only for non-commercial research.

For more details and results, please consult the full paper.

This work was partially supported by JST CREST Grant Number JPMJCR14D1, and JST ACT-I Grant Numbers JPMJPR16UD and JPMJPR16U3.