2025

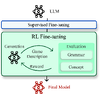

Game Description Generation (GDG) is the task of generating a game description

written in a Game Description Language (GDL) from natural language text. Previous

studies have explored generation methods leveraging the contextual understanding

capabilities of Large Language Models (LLMs); however, accurately reproducing the

game features of the game descriptions remains a challenge. In this paper, we

propose reinforcement learning-based fine-tuning of LLMs for GDG (RLGDG). Our

training method simultaneously improves grammatical correctness and fidelity to

game concepts by introducing both grammar rewards and concept rewards. Furthermore,

we adopt a two-stage training strategy where Reinforcement Learning (RL) is applied

following Supervised Fine-Tuning (SFT). Experimental results demonstrate that our

proposed method significantly outperforms baseline methods using SFT alone.

@InProceedings{TanakaCOG2025,

author = {Tsunehiko Tanaka and Edgar Simo-Serra},

title = {{Grammar and Gameplay-aligned RL for Game Description Generation with LLMs}},

booktitle = "Proceedings of the Conference on Games (CoG)",

year = 2025,

}

We propose a neural screen-space refraction baking method for global illumination

rendering, with applicability to real-time 3D games. Existing neural global

illumination rendering methods often struggle with refractive objects due to the

lack of texture information in G-Buffers. While some existing approaches extend

neural global illumination to refractive objects by predicting texture maps (UV

maps), they are limited to objects with simple geometry and UV maps. In contrast,

our method bakes refracted textures without these assumptions by directly encoding

the world coordinates of refracted objects into the neural network instead of UV

coordinates. Our experiments demonstrate that our approach performs better on

refraction rendering than previous methods. Additionally, we investigate the

differences in neural network performance when baking coordinates in different

spaces, such as world space, screen space, and UV space, showing the best results

yielded by baking in world-space coordinates.

@InProceedings{ZiyangCVPRW2023,

author = {Ziyang Zhang and Edgar Simo-Serra},

title = {{G-Buffer Supported Neural Screen-space Refraction Baking for Real-Time Global Illumination}},

booktitle = "Proceedings of the Conference on Computer Vision and Pattern Recognition Workshops (CVPRW)",

year = 2025,

}

Traditional approaches in offline reinforcement learning aim to learn the optimal

policy that maximizes the cumulative reward, also known as return. It is

increasingly important to adjust the performance of AI agents to meet human

requirements, for example, in applications like video games and education tools.

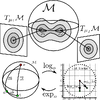

Decision Transformer (DT) optimizes a policy that generates actions conditioned on

the target return through supervised learning and includes a mechanism to control

the agent's performance using the target return. However, the action generation is

hardly influenced by the target return because DT’s self-attention allocates scarce

attention scores to the return tokens. In this paper, we propose Return-Aligned

Decision Transformer (RADT), designed to more effectively align the actual return

with the target return. RADT leverages features extracted by paying attention

solely to the return, enabling action generation to consistently depend on the

target return. Extensive experiments show that RADT significantly reduces the

discrepancies between the actual return and the target return compared to DT-based

methods.

@Article{TanakaTMLR2024,

author = {Tsunehiko Tanaka and Kenshi Abe and Kaito Ariu and Tetsuro Morimura and Edgar Simo-Serra},

title = {{Return-Aligned Decision Transformer}},

journal = "Transactions on Machine Learning Research",

year = 2025,

volume = 1,

number = 1,

}

2024

Game Description Language (GDL) provides a standardized way to express diverse

games in a machine-readable format, enabling automated game simulation, and

evaluation. While previous research has explored game description generation using

search-based methods, generating GDL descriptions from natural language remains a

challenging task. This paper presents a novel framework that leverages Large

Language Models (LLMs) to generate grammatically accurate game descriptions from

natural language. Our approach consists of two stages: first, we gradually generate

a minimal grammar based on GDL specifications; second, we iteratively improve the

game description through grammar-guided generation. Our framework employs a

specialized parser that identifies valid subsequences and candidate symbols from

LLM responses, enabling gradual refinement of the output to ensure grammatical

correctness. Experimental results demonstrate that our iterative improvement

approach significantly outperforms baseline methods that directly use LLM outputs.

@Article{TanakaTOG2024,

author = {Tsunehiko Tanaka and Edgar Simo-Serra},

title = {{Grammar-based Game Description Generation using Large Language Models}},

journal = "Transactions on Games",

year = 2024,

volume = 1,

number = 1,

}

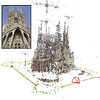

Neural rendering bakes global illumination and other computationally costly effects

into the weights of a neural network, allowing to efficiently synthesize

photorealistic images without relying on path tracing. In neural rendering

approaches, G-buffers obtained from rasterization through direct rendering provide

information regarding the scene such as position, normal, and textures to the

neural network, achieving accurate and stable rendering quality in real-time.

However, due to the use of G-buffers, existing methods struggle to accurately

render transparency and refraction effects, as G-buffers do not capture any ray

information from multiple light ray bounces. This limitation results in blurriness,

distortions, and loss of detail in rendered images that contain transparency and

refraction, and is particularly notable in scenes with refracted objects that have

high-frequency textures. In this work, we propose a neural network architecture to

encode critical rendering information, including texture coordinates from refracted

rays, and enable reconstruction of high-frequency textures in areas with

refraction. Our approach is able to achieves accurate refraction rendering in

challenging scenes with a diversity of overlapping transparent objects.

Experimental results demonstrate that our method can interactively render high

quality refraction effects with global illumination, unlike existing neural

rendering approaches.

@Article{ZiyangPG2024,

author = {Ziyang Zhang and Edgar Simo-Serra},

title = {{CrystalNet: Texture-Aware Neural Refraction Baking for Global Illumination}},

journal = {Computer Graphics Forum (Pacific Graphics)},

year = 2024,

}

Neural Global Illumination Baking with Neural Texture Mapping on

Refractive Objects

Ziyang Zhang, Edgar Simo-Serra

Visual Computing (ポスター), 2024

Hint-AUC: Deterministic Region-based Hint Generation for Line Art

Colorization Evaluation

Madono Koki, Yuan Mingcheng, Edgar Simo-Serra

Visual Computing (ポスター), 2024

Deep Sketch Vectorization via Implicit Surface Extraction

Chuan Yan, Yong Li, Deepali Aneja, Matthew Fisher, Edgar Simo-Serra, Yotam

Gingold

ACM Transactions on Graphics (SIGGRAPH), 2024

We introduce an algorithm for sketch vectorization with state-of-the-art accuracy

and capable of handling complex sketches. We approach sketch vectorization as a

surface extraction task from an unsigned distance field, which is implemented using

a two-stage neural network and a dual contouring domain post processing algorithm.

This word is normally spelled with a hyphen. The first stage consists of extracting

unsigned distance fields from an input raster image. The second stage consists of

an improved neural dual contouring network more robust to noisy input and more

sensitive to line geometry. To address the issue of under-sampling inherent in

grid-based surface extraction approaches, we explicitly predict undersampling and

keypoint maps. These are used in our post-processing algorithm to resolve sharp

features and multi-way junctions. The keypoint and undersampling maps are naturally

controllable, which we demonstrate in an interactive topology refinement interface.

Our proposed approach produces far more accurate vectorizations on complex input

than previous approaches with efficient running time.

@Article{ChuanSIGGRAPH2024,

author = {Chuan Yan and Yong Li and Deepali Aneja and Matthew Fisher and Edgar Simo-Serra and Yotam Gingold},

title = {{Deep Sketch Vectorization via Implicit Surface Extraction}},

journal = "ACM Transactions on Graphics (SIGGRAPH)",

year = 2024,

volume = 43,

number = 4,

}

Object-Oriented Embeddings for Offline RL using Unsupervised Object

Detection

Tsunehiko Tanaka, Edgar Simo-Serra

画像の認識・理解シンポジウム(MIRU), 2024

Multimodal Markup Language Models for Graphic Design Completion

Kotaro Kikuchi, Naoto Inoue, Mayu Otani, Edgar Simo-Serra, Kota Yamaguchi

画像の認識・理解シンポジウム(MIRU), 2024

Neural rendering provides a fundamentally new way to render photorealistic images.

Similar to traditional light-baking methods, neural rendering utilizes neural

networks to bake representations of scenes, materials, and lights into latent

vectors learned from path-tracing ground truths. However, existing neural rendering

algorithms typically use G-buffers to provide position, normal, and texture

information of scenes, which are prone to occlusion by transparent surfaces,

leading to distortions and loss of detail in the rendered images. To address this

limitation, we propose a novel neural rendering pipeline that accurately renders

the scene behind transparent surfaces with global illumination and variable scenes.

Our method separates the G-buffers of opaque and transparent objects, retaining

G-buffer information behind transparent objects. Additionally, to render the

transparent objects with permutation invariance, we designed a new

permutation-invariant neural blending function. We integrate our algorithm into an

efficient custom renderer to achieve real-time performance. Our results show that

our method is capable of rendering photorealistic images with variable scenes and

viewpoints, accurately capturing complex transparent structures along with global

illumination. Our renderer can achieve real-time performance (256x256 at 63 FPS and

512x512 at 32 FPS) on scenes with multiple variable transparent objects.

@Article{ZiyangCVM2024,

author = {Ziyang Zhang and Edgar Simo-Serra},

title = {{Deep Sketch Vectorization via Implicit Surface Extraction}},

journal = "Computational Visual Media",

year = 2024,

volume = "??",

number = "??",

}

There exists a large number of old films that have not only artistic value but also

historical significance. However, due to the degradation of analogue medium over

time, old films often suffer from various deteriorations that make it difficult to

restore them with existing approaches. In this work, we proposed a novel framework

called Recursive Recurrent Transformer Network (RRTN) which is specifically

designed for restoring degraded old films. Our approach introduces several key

advancements, including a more accurate film noise mask estimation method, the

utilization of second-order grid propagation and flow-guided deformable alignment,

and the incorporation of a recursive structure to further improve the removal of

challenging film noise. Through qualitative and quantitative evaluations, our

approach demonstrates superior performance compared to existing approaches,

effectively improving the restoration for difficult film noises that cannot be

perfectly handled by existing approaches.

@InProceedings{LinWACV2024,

author = {Shan Lin and Edgar Simo-Serra},

title = {{Restoring Degraded Old Films with Recursive Recurrent Transformer Networks}},

booktitle = "Proceedings of the Winter Conference on Applications of Computer Vision (WACV)",

year = 2024,

}

2023

We present a novel framework for rectifying occlusions and distortions in degraded

texture samples from natural images. Traditional texture synthesis approaches focus

on generating textures from pristine samples, which necessitate meticulous

preparation by humans and are often unattainable in most natural images. These

challenges stem from the frequent occlusions and distortions of texture samples in

natural images due to obstructions and variations in object surface geometry. To

address these issues, we propose a framework that synthesizes holistic textures

from degraded samples in natural images, extending the applicability of

exemplar-based texture synthesis techniques. Our framework utilizes a conditional

Latent Diffusion Model (LDM) with a novel occlusion-aware latent transformer. This

latent transformer not only effectively encodes texture features from

partially-observed samples necessary for the generation process of the LDM, but

also explicitly captures long-range dependencies in samples with large occlusions.

To train our model, we introduce a method for generating synthetic data by applying

geometric transformations and free-form mask generation to clean textures.

Experimental results demonstrate that our framework significantly outperforms

existing methods both quantitatively and quantitatively. Furthermore, we conduct

comprehensive ablation studies to validate the different components of our proposed

framework. Results are corroborated by a perceptual user study which highlights the

efficiency of our proposed approach.

@Inproceedings{HaoSIGGRAPHASIA2023,

author = {Guoqing Hao and Satoshi Iizuka and Kensho Hara and Edgar Simo-Serra and Hirokatsu Kataoka and Kazuhiro Fukui},

title = {{Diffusion-based Holistic Texture Rectification and Synthesis}},

booktitle = "ACM SIGGRAPH Asia 2023 Conference Papers",

year = 2023,

}

Although digital painting has advanced much in recent years, there is still a

significant divide between physically drawn paintings and purely digitally drawn

paintings. These differences arise due to the physical interactions between the

brush, ink, and paper, which are hard to emulate in the digital domain. Most ink

painting approaches have focused on either using heuristics or physical simulation

to attempt to bridge the gap between digital and analog, however, these approaches

are still unable to capture the diversity of painting effects, such as ink fading

or blotting, found in the real world. In this work, we propose a data-driven

approach to generate ink paintings based on a semi-automatically collected

high-quality real-world ink painting dataset. We use a multi-camera robot-based

setup to automatically create a diversity of ink paintings, which allows for

capturing the entire process in high resolution, including capturing detailed brush

motions and drawing results. To ensure high-quality capture of the painting

process, we calibrate the setup and perform occlusion-aware blending to capture all

the strokes in high resolution in a robust and efficient way. Using our new

dataset, we propose a recursive deep learning-based model to reproduce the ink

paintings stroke by stroke while capturing complex ink painting effects such as

bleeding and mixing. Our results corroborate the fidelity of the proposed approach

to real hand-drawn ink paintings in comparison with existing approaches. We hope

the availability of our dataset will encourage new research on digital realistic

ink painting techniques.

@Article{MadonoPG2023,

author = {Koki Madono and Edgar Simo-Serra},

title = {{Data-Driven Ink Painting Brushstroke Rendering}},

journal = {Computer Graphics Forum (Pacific Graphics)},

year = 2023,

}

Neural Global Illumination for Permutation Invariant

Transparency

Ziyang Zhang, Edgar Simo-Serra

Visual Computing (ショートオーラル), 2023

Recursive Recurrent Transformer Network for Degraded Film

Restoration

Shan Lin, Edgar Simo-Serra

Visual Computing (ショートオーラル), 2023

Diffusion-based Holistic Texture Rectification and Synthesis

Guoqing Hao, Satoshi Iizuka, Kensho Hara, Edgar Simo-Serra, Hirokatsu Kataoka,

Kazuhiro Fukui

Visual Computing (ロングオーラル), 2023

Leveraging Object Detectors for Online Action Detection

Tsunehiko Tanaka, Edgar Simo-Serra

画像の認識・理解シンポジウム(MIRU), 2023

順伝播型ニューラルネットワーク によるブラシスタイル変換

木幡 咲希, シモセラ エドガー

画像の認識・理解シンポジウム(MIRU), 2023

ガウス過程回帰と局所画像特徴量を用いた産業用X線CT画像からの亀裂抽出

石井 裕大, 下茂 道人, シモセラ エドガー

画像の認識・理解シンポジウム(MIRU), 2023

This study introduces a novel methodology for generating levels in the iconic video

game Super Mario Bros. using a diffusion model based on a UNet architecture. The

model is trained on existing levels, represented as a categorical distribution, to

accurately capture the game’s fundamental mechanics and design principles. The

proposed approach demonstrates notable success in producing high-quality and

diverse levels, with a significant proportion being playable by an artificial

agent. This research emphasizes the potential of diffusion models as an efficient

tool for procedural content generation and highlights their potential impact on the

development of new video games and the enhancement of existing games through

generated content.

@InProceedings{LeeMVA2023,

author = {Hyeon Joon Lee and Edgar Simo-Serra},

title = {{Using Unconditional Diffusion Models in Level Generation for Super Mario Bros.}},

booktitle = "International Conference on Machine Vision Applications (MVA)",

year = 2023,

}

Towards Flexible Multi-modal Document Models

Naoto Inoue, Kotaro Kikuchi, Edgar Simo-Serra, Mayu Otani, Kota Yamaguchi

Conference in Computer Vision and Pattern Recognition

(CVPR), 2023

Creative workflows for generating graphical documents involve complex inter-related

tasks, such as aligning elements, choosing appropriate fonts, or employing

aesthetically harmonious colors. In this work, we attempt at building a holistic

model that can jointly solve many different design tasks. Our model, which we

denote by FlexDM, treats vector graphic documents as a set of multi-modal elements,

and learns to predict masked fields such as element type, position, styling

attributes, image, or text, using a unified architecture. Through the use of

explicit multi-task learning and in-domain pre-training, our model can better

capture the multi-modal relationships among the different document fields.

Experimental results corroborate that our single FlexDM is able to successfully

solve a multitude of different design tasks, while achieving performance that is

competitive with task-specific and costly baselines.

@InProceedings{InoueCVPR2023b,

title = {{Towards Flexible Multi-modal Document Models}},

author = {Naoto Inoue and Kotaro Kikuchi and Edgar Simo-Serra and Mayu Otani and Kota Yamaguchi},

booktitle = "Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR)",

year = 2023,

}

LayoutDM: Discrete Diffusion Model for Controllable Layout

Generation

Naoto Inoue, Kotaro Kikuchi, Edgar Simo-Serra, Mayu Otani, Kota Yamaguchi

Conference in Computer Vision and Pattern Recognition

(CVPR), 2023

Controllable layout generation aims at synthesizing plausible arrangement of

element bounding boxes with optional constraints, such as type or position of a

specific element. In this work, we try to solve a broad range of layout generation

tasks in a single model that is based on discrete state-space diffusion models. Our

model, named LayoutDM, naturally handles the structured layout data in the discrete

representation and learns to progressively infer a noiseless layout from the

initial input, where we model the layout corruption process by modality-wise

discrete diffusion. For conditional generation, we propose to inject layout

constraints in the form of masking or logit adjustment during inference. We show in

the experiments that our LayoutDM successfully generates high-quality layouts and

outperforms both task-specific and task-agnostic baselines on several layout tasks.

@InProceedings{InoueCVPR2023a,

title = {{LayoutDM: Discrete Diffusion Model for Controllable Layout Generation}},

author = {Naoto Inoue and Kotaro Kikuchi and Edgar Simo-Serra and Mayu Otani and Kota Yamaguchi},

booktitle = "Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR)",

year = 2023,

}

Colorization of line art drawings is an important task in illustration and

animation workflows. However, this highly laborious process is mainly done

manually, limiting the creative productivity. This paper presents a novel

interactive approach for line art colorization using conditional Diffusion

Probabilistic Models (DPMs). In our proposed approach, the user provides initial

color strokes for colorizing the line art. The strokes are then integrated into the

conditional DPM-based colorization process by means of a coupled implicit and

explicit conditioning strategy to generates diverse and high-quality colorized

images. We evaluate our proposal and show it outperforms existing state-of-the-art

approaches using the FID, LPIPS and SSIM metrics.

@InProceedings{CarrilloCVPRW2023,

author = {Hernan Carrillo and Micha\"el Cl/'ement and Aur\'elie Bugeau and Edgar Simo-Serra},

title = {{Diffusart: Enhancing Line Art Colorization with Conditional Diffusion Models}},

booktitle = "Proceedings of the Conference on Computer Vision and Pattern Recognition Workshops (CVPRW)",

year = 2023,

}

Controllable Multi-domain Semantic Artwork Synthesis

Yuantian Huang, Satoshi Iizuka, Edgar Simo-Serra, Kazuhiro Fukui

Computational Visual Media, 2023

We present a novel framework for multi-domain synthesis of artwork from semantic

layouts. One of the main limitations of this challenging task is the lack of

publicly available segmentation datasets for art synthesis. To address this

problem, we propose a dataset, which we call ArtSem, that contains 40,000 images of

artwork from 4 different domains with their corresponding semantic label maps. We

generate the dataset by first extracting semantic maps from landscape photography

and then propose a conditional Generative Adversarial Network (GAN)-based approach

to generate high-quality artwork from the semantic maps without necessitating

paired training data. Furthermore, we propose an artwork synthesis model that uses

domain-dependent variational encoders for high-quality multi-domain synthesis. The

model is improved and complemented with a simple but effective normalization

method, based on normalizing both the semantic and style jointly, which we call

Spatially STyle-Adaptive Normalization (SSTAN). In contrast to previous methods

that only take semantic layout as input, our model is able to learn a joint

representation of both style and semantic information, which leads to better

generation quality for synthesizing artistic images. Results indicate that our

model learns to separate the domains in the latent space, and thus, by identifying

the hyperplanes that separate the different domains, we can also perform

fine-grained control of the synthesized artwork. By combining our proposed dataset

and approach, we are able to generate user-controllable artwork that is of higher

quality than existing approaches, as corroborated by both quantitative metrics and

a user study.

@Article{HuangCVM2023,

title = {{Controllable Multi-domain Semantic Artwork Synthesis}},

author = {Yuantian Huang and Satoshi Iizuka and Edgar Simo-Serra and Kazuhiro Fukui},

journal = "Computational Visual Media",

year = 2023,

volume = 39,

number = 2,

}

Color is a critical design factor for web pages, affecting important factors such

as viewer emotions and the overall trust and satisfaction of a website. Effective

coloring re- quires design knowledge and expertise, but if this process could be

automated through data-driven modeling, efficient exploration and alternative

workflows would be possible. However, this direction remains underexplored due to

the lack of a formalization of the web page colorization prob- lem, datasets, and

evaluation protocols. In this work, we propose a new dataset consisting of

e-commerce mobile web pages in a tractable format, which are created by simplify-

ing the pages and extracting canonical color styles with a common web browser. The

web page colorization problem is then formalized as a task of estimating plausible

color styles for a given web page content with a given hierarchical structure of

the elements. We present several Transformer- based methods that are adapted to

this task by prepending structural message passing to capture hierarchical

relation- ships between elements. Experimental results, including a quantitative

evaluation designed for this task, demonstrate the advantages of our methods over

statistical and image colorization methods.

@InProceedings{KikuchiWACV2023,

author = {Kotaro Kikuchi and Naoto Inoue and Mayo Otani and Edgar Simo-Serra and Kota Yamaguchi},

title = {{Generative Colorization of Structured Mobile Web Pages}},

booktitle = "Proceedings of the Winter Conference on Applications of Computer Vision (WACV)",

year = 2023,

}

2022

Image Synthesis-based Late Stage Cancer Augmentation and Semi-Supervised

Segmentation for MRI Rectal Cancer Staging

Saeko Sasuga, Akira Kudo, Yoshiro Kitamura, Satoshi Iizuka, Edgar Simo-Serra,

Atsushi Hamabe, Masayuki Ishii, Ichiro Takemasa

International Conference on Medical Image Computing and Computer Assisted

Intervention Workshops (MICCAIW), 2022

Rectal cancer is one of the most common diseases and a major cause of mortality.

For deciding rectal cancer treatment plans, T- staging is important. However,

evaluating the index from preoperative MRI images requires high radiologists’ skill

and experience. Therefore, the aim of this study is to segment the mesorectum,

rectum, and rectal cancer region so that the system can predict T-stage from

segmentation results. Generally, shortage of large and diverse dataset and high

quality an- notation are known to be the bottlenecks in computer aided diagnos-

tics development. Regarding rectal cancer, advanced cancer images are very rare,

and per-pixel annotation requires high radiologists’ skill and time. Therefore, it

is not feasible to collect comprehensive disease pat- terns in a training dataset.

To tackle this, we propose two kinds of ap- proaches of image synthesis-based late

stage cancer augmentation and semi-supervised learning which is designed for

T-stage prediction. In the image synthesis data augmentation approach, we generated

advanced cancer images from labels. The real cancer labels were deformed to re-

semble advanced cancer labels by artificial cancer progress simulation. Next, we

introduce a T-staging loss which enables us to train segmen- tation models from

per-image T-stage labels. The loss works to keep inclusion/invasion relationships

between rectum and cancer region con- sistent to the ground truth T-stage. The

verification tests show that the proposed method obtains the best sensitivity

(0.76) and specificity (0.80) in distinguishing between over T3 stage and underT2.

In the ab- lation studies, our semi-supervised learning approach with the T-staging

loss improved specificity by 0.13. Adding the image synthesis-based data

augmentation improved the DICE score of invasion cancer area by 0.08 from baseline.

We expect that this rectal cancer staging AI can help doctors to diagnose cancer

staging accurately.

@InProceedings{SasugaMICCAIW2022,

author = {Saeko Sasuga and Akira Kudo and Yoshiro Kitamura and Satoshi Iizuka and Edgar Simo-Serra and Atsushi Hamabe and Masayuki Ishii and Ichiro Takemasa},

title = {{Image Synthesis-based Late Stage Cancer Augmentation and Semi-Supervised Segmentation for MRI Rectal Cancer Staging}},

booktitle = "Proceedings of the International Conference on Medical Image Computing and Computer Assisted Intervention Workshops (MICCAIW)",

year = 2022,

}

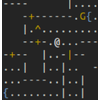

Dealing with unstructured complex patterns provides a challenge to existing

reinforcement patterns. In this research, we propose a new model to overcome the

difficulty in challenging danmaku games. Touhou Project is one of the best-known

games in the bullet hell genre also known as danmaku, where a player has to dodge

complex patterns of bullets on the screen. Furthermore, the agent needs to react to

the environment in real-time, which made existing methods having difficulties

processing the high-volume data of objects; bullets, enemies, etc. We introduce an

environment for the Touhou Project game 東方花映塚 ~ Phantasmagoria of Flower View which

manipulates the memory of the running game and enables to control the character.

However, the game state information consists of unstructured and unordered data not

amenable for training existing reinforcement learning models, as they are not

invariant to order changes in the input. To overcome this issue, we propose a new

pooling-based reinforcement learning approach that is able to handle permutation

invariant inputs by extracting abstract values and merging them in an

order-independent way. Experimental results corroborate the effectiveness of our

approach which shows significantly increased scores compared to existing baseline

approaches.

@InProceedings{ItoiCOG2022,

author = {Takuto Itoi and Edgar Simo-Serra},

title = {{PIFE: Permutation Invariant Feature Extractor for Danmaku Games}},

booktitle = "Proceedings of the Conference on Games (CoG, Short Oral)",

year = 2022,

}

Beyond a Simple Denoiser: Multi-Domain Adversarial Transformer for

Low-Light Photograph Enhancement

Siyuan Zhu, Edgar Simo-Serra

第22回画像の認識・理解シンポジウム(MIRU), 2022

モバイルWebページの構造的な色付け

菊池 康太郎, 井上 直人, 大谷 まゆ, シモセラエド ガー, 山口 光太

第22回画像の認識・理解シンポジウム(MIRU), 2022

Towards Universal Multi-Modal Layout Models

Naoto Inoue, Kotaro Kikuchi, Edgar Simo-Serra, Mayu Otani, Kota Yamaguchi

第22回画像の認識・理解シンポジウム(MIRU、ロングオーラル), 2022

2021

It is common in graphic design humans visually arrange various elements according

to their design intent and semantics. For example, a title text almost always

appears on top of other elements in a document. In this work, we generate graphic

layouts that can flexibly incorporate such design semantics, either specified

implicitly or explicitly by a user. We optimize using the latent space of an

off-the-shelf layout generation model, allowing our approach to be complementary to

and used with existing layout generation models. Our approach builds on a

generative layout model based on a Transformer architecture, and formulates the

layout generation as a constrained optimization problem where design constraints

are used for element alignment, overlap avoidance, or any other user-specified

relationship. We show in the experiments that our approach is capable of generating

realistic layouts in both constrained and unconstrained generation tasks with a

single model.

@InProceedings{KikuchiMM2021,

author = {Kotaro Kikuchi and Edgar Simo-Serra and Mayu Otani and Kota Yamaguchi},

title = {{Constrained Graphic Layout Generation via Latent Optimization}},

booktitle = "Proceedings of the ACM International Conference on Multimedia (MM)",

year = 2021,

}

Web pages have become fundamental in conveying information for companies and

individuals, yet designing web page layouts remains a challenging task for

inexperienced individuals despite web builders and templates. Visual containment,

in which elements are grouped together and placed inside container elements, is an

efficient design strategy for organizing elements in a limited display, and is

widely implemented in most web page designs. Yet, visual containment has not been

explicitly addressed in the research on generating layouts from scratch, which may

be due to the lack of hierarchical structure. In this work, we represent such

visual containment as a layout tree, and formulate the layout design task as a

hierarchical optimization problem. We first estimate the layout tree from a given a

set of elements, which is then used to compute tree-aware energies corresponding to

various desirable design properties such as alignment or spacing. Using an

optimization approach also allows our method to naturally incorporate user

intentions and create an interactive web design application. We obtain a dataset of

diverse and popular real-world web designs to optimize and evaluate various aspects

of our method. Experimental results show that our method generates better quality

layouts compared to the baseline method.

@Article{KikuchiPG2021,

author = {Kotaro Kikuchi and Edgar Simo-Serra and Mayu Otani and Kota Yamaguchi},

title = {{Modeling Visual Containment for Web Page Layout Optimization}},

journal = {Computer Graphics Forum (Pacific Graphics)},

year = 2021,

}

Exploring Latent Space for Constrained Layout Generation

Kotaro Kikuchi, Edgar Simo-Serra, Mayu Otani, Kota Yamaguchi

Visual Computing (ロングオーラル), 2021

Hierarchical Layout Optimization with Containment-aware

Parameterization

Kotaro Kikuchi, Edgar Simo-Serra, Mayu Otani, Kota Yamaguchi

Visual Computing (ロングオーラル), 2021

Item Management Using Attention Mechanism and Meta Actions in Roguelike

Games

Keisuki Izumiya, Edgar Simo-Serra

Visual Computing (ロングオーラル), 2021

Vector line art plays an important role in graphic design, however, it is tedious

to manually create. We introduce a general framework to produce line drawings from

a wide variety of images, by learning a mapping from raster image space to vector

image space. Our approach is based on a recurrent neural network that draws the

lines one by one. A differentiable rasterization module allows for training with

only supervised raster data. We use a dynamic window around a virtual pen while

drawing lines, implemented with a proposed aligned cropping and differentiable

pasting modules. Furthermore, we develop a stroke regularization loss that

encourages the model to use fewer and longer strokes to simplify the resulting

vector image. Ablation studies and comparisons with existing methods corroborate

the efficiency of our approach which is able to generate visually better results in

less computation time, while generalizing better to a diversity of images and

applications.

@Article{HaoranSIGGRAPH2021,

author = {Haoran Mo and Edgar Simo-Serra and Chengying Gao and Changqing Zou and Ruomei Wang},

title = {{General Virtual Sketching Framework for Vector Line Art}},

journal = "ACM Transactions on Graphics (SIGGRAPH)",

year = 2021,

volume = 40,

number = 4,

}

Masked Transformers for Esports Video Captioning

Tsunehiko Tanaka, Edgar Simo-Serra

画像の認識・理解シンポジウム(MIRU), 2021

High-quality Multi-domain Artwork Generation from Semantic

Layouts

Yuantian Huang, Satoshi Iizuka, Edgar Simo-Serra, Kazuhiro Fukui

画像の認識・理解シンポジウム(MIRU、ショートオーラル), 2021

Self-Supervised Fisheye Image Rectification by Reconstructing Coordinate

Relations

Masaki Hosono, Edgar Simo-Serra, Tomonari Sonoda

画像の認識・理解シンポジウム(MIRU), 2021

With the ascent of wearable camera, dashcam, and autonomous vehicle technology,

fisheye lens cameras are becoming more widespread. Unlike regular cameras, the

videos and images taken with fisheye lens suffer from significant lens distortion,

thus having detrimental effects on image processing algorithms. When the camera

parameters are known, it is straight-forward to correct the distortion, however,

without known camera parameters, distortion correction becomes a non-trivial task.

While learning-based approaches exist, they rely on complex datasets and have

limited generalization. In this work, we propose a CNN-based approach that can be

trained with readily available data. We exploit the fact that relationships between

pixel coordinates remain stable after homogeneous distortions to design an

efficient rectification model. Experiments performed on the cityscapes dataset show

the effectiveness of our approach.

@InProceedings{HosonoMVA2021,

author = {Masaki Hosono and Edgar Simo-Serra and Tomonari Sonoda},

title = {{Unsupervised Deep Fisheye Image Rectification Approach using Coordinate Relations}},

booktitle = "International Conference on Machine Vision Applications (MVA)",

year = 2021,

}

Roguelike games are a challenging environment for Reinforcement Learning (RL)

algorithms due to having to restart the game from the beginning when losing,

randomized procedural generation, and proper use of in-game items being essential

to success. While recent research has proposed roguelike environments for RL

algorithms and proposed models to handle this challenging task, to the best of our

knowledge, none have dealt with the elephant in the room, i.e., handling of items.

Items play a fundamental role in roguelikes and are acquired during gameplay.

However, being an unordered set with a non-fixed amount of elements which form part

of the action space, it is not straightforward to incorporate them into an RL

framework. In this work, we tackle the issue of having unordered sets be part of

the action space and propose an attention-based mechanism that can select and deal

with item-based actions. We also propose a model that can handle complex actions

and items through a meta action framework and evaluate them on the challenging game

of NetHack. Experimental results show that our approach is able to significantly

outperform existing approaches.

@InProceedings{IzumiyaCOG2021,

author = {Keisuke Izumiya and Edgar Simo-Serra},

title = {{Inventory Managament with Attention-Based Meta Actions}},

booktitle = "Proceedings of the Conference on Games (CoG)",

year = 2021,

}

Flat filling is a critical step in digital artistic content creation with the

objective of filling line arts with flat colours. We present a deep learning

framework for user-guided line art flat filling that can compute the "influence

areas" of the user colour scribbles, i.e., the areas where the user scribbles

should propagate and influence. This framework explicitly controls such scribble

influence areas for artists to manipulate the colours of image details and avoid

colour leakage/contamination between scribbles, and simultaneously, leverages

data-driven colour generation to facilitate content creation. This framework is

based on a Split Filling Mechanism (SFM), which first splits the user scribbles

into individual groups and then independently processes the colours and influence

areas of each group with a Convolutional Neural Network (CNN). Learned from more

than a million illustrations, the framework can estimate the scribble influence

areas in a content-aware manner, and can smartly generate visually pleasing colours

to assist the daily works of artists. We show that our proposed framework is easy

to use, allowing even amateurs to obtain professional-quality results on a wide

variety of line arts.

@InProceedings{ZhangCVPR2021,

author = {Lvmin Zhang and Chengze Li and Edgar Simo-Serra and Yi Ji and Tien-Tsin Wong and Chunping Liu},

title = {{User-Guided Line Art Flat Filling with Split Filling Mechanism}},

booktitle = "Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR)",

year = 2021,

}

Line art plays a fundamental role in illustration and design, and allows for

iteratively polishing designs. However, as they lack color, they can have issues in

conveying final designs. In this work, we propose an interactive colorization

approach based on a conditional generative adversarial network that takes both the

line art and color hints as inputs to produce a high-quality colorized image. Our

approach is based on a U-net architecture with a multi-discriminator framework. We

propose a Concatenation and Spatial Attention module that is able to generate more

consistent and higher quality of line art colorization from user given hints. We

evaluate on a large-scale illustration dataset and comparison with existing

approaches corroborate the effectiveness of our approach.

@InProceedings{YuanCVPRW2021,

author = {Mingcheng Yuan and Edgar Simo-Serra},

title = {{Line Art Colorization with Concatenated Spatial Attention}},

booktitle = "Proceedings of the Conference on Computer Vision and Pattern Recognition Workshops (CVPRW)",

year = 2021,

}

Esports is a fastest-growing new field with a largely online-presence, and is

creating a demand for automatic domain-specific captioning tools. However, at the

current time, there are few approaches that tackle the esports video description

problem. In this work, we propose a large-scale dataset for esports video

description, focusing on the popular game "League of Legends". The dataset, which

we call LoL-V2T, is the largest video description dataset in the vldeo game domain,

and includes 9,723 clips with 62,677 captions. This new dataset presents multiple

new video captioning challenges such as large amounts of domain-specific

vocabulary, subtle motions with large importance, and a temporal gap between most

captions and the events that occurred. In order to tackle the issue of vocabulary,

we propose a masking the domain-specific words and provide additional annotations

for this. In our results, we show that the dataset poses a challenge to existing

video captioning approaches, and the masking can significantly improve performance.

@InProceedings{TanakaCVPRW2021,

author = {Tsunehiko Tanaka and Edgar Simo-Serra},

title = {{LoL-V2T: Large-Scale Esports Video Description Dataset}},

booktitle = "Proceedings of the Conference on Computer Vision and Pattern Recognition Workshops (CVPRW)",

year = 2021,

}

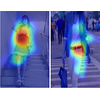

Conditional human image generation, or generation of human images with specified

pose based on one or more reference images, is an inherently ill-defined problem,

as there can be multiple plausible appearance for parts that are occluded in the

reference. Using multiple images can mitigate this problem while boosting the

performance. In this work, we introduce a differentiable vertex and edge renderer

for incorporating the pose information to realize human image generation

conditioned on multiple reference images. The differentiable renderer has

parameters that can be jointly optimized with other parts of the system to obtain

better results by learning more meaningful shape representation of human pose. We

evaluate our method on the Market-1501 and DeepFashion datasets and comparison with

existing approaches validates the effectiveness of our approach.

@InProceedings{HoriuchiCVPRW2021,

author = {Yusuke Horiuchi and Edgar Simo-Serra and Satoshi Iizuka and Hiroshi Ishikawa},

title = {{Differentiable Rendering-based Pose-Conditioned Human Image Generation}},

booktitle = "Proceedings of the Conference on Computer Vision and Pattern Recognition Workshops (CVPRW)",

year = 2021,

}

2020

P²Net: A Post-Processing Network for Refining Semantic Segmentation of

LiDAR Point Cloud based on Consistency of Consecutive Frames

Yutaka Momma, Weimin Wang, Edgar Simo-Serra, Satoshi Iizuka, Ryosuke Nakamura,

Hiroshi Ishikawa

IEEE International Conference on Systems, Man, and Cybernetics, 2020

We present a lightweight post-processing method to refine the semantic segmentation

results of point cloud sequences. Most existing methods usually segment frame by

frame and encounter the inherent ambiguity of the problem: based on a measurement

in a single frame, labels are sometimes difficult to predict even for humans. To

remedy this problem, we propose to explicitly train a network to refine these

results predicted by an existing segmentation method. The network, which we call

the P²Net, learns the consistency constraints between "coincident" points from

consecutive frames after registration. We evaluate the proposed post-processing

method both qualitatively and quantitatively on the SemanticKITTI dataset that

consists of real outdoor scenes. The effectiveness of the proposed method is

validated by comparing the results predicted by two representative networks with

and without the refinement by the post-processing network. Specifically,

qualitative visualization validates the key idea that labels of the points that are

difficult to predict can be corrected with P²NNet. Quantitatively, overall mIoU is

improved from 10.5% to 11.7% for PointNet and from 10.8% to 15.9% for PointNet++.

@InProceedings{MommaSMC2020,

author = {Yutaka Momma and Weimin Wang and Edgar Simo-Serra and Satoshi Iizuka and Ryosuke Nakamura and Hiroshi Ishikawa},

title = {{P²Net: A Post-Processing Network for Refining Semantic Segmentation of LiDAR Point Cloud based on Consistency of Consecutive Frames}},

booktitle = "Proceedings of the IEEE International Conference on Systems, Man, and Cybernetics (SMC)",

year = 2020,

}

Automatic Segmentation, Localization and Identification of Vertebrae in

3D CT Images Using Cascaded Convolutional Neural Networks

Naoto Masuzawa, Yoshiro Kitamura, Keigo Nakamura, Satoshi Iizuka, Edgar

Simo-Serra

International Conference on Medical Image Computing and Computer

Assisted Intervention (MICCAI), 2020

This paper presents a method for automatic segmentation, localization, and

identification of vertebrae in arbitrary 3D CT images. Many previous works do not

perform the three tasks simultaneously even though requiring a priori knowledge of

which part of the anatomy is visible in the 3D CT images. Our method tackles all

these tasks in a single multi-stage framework without any assumptions. In the first

stage, we train a 3D Fully Convolutional Networks to find the bounding box of the

cervical, thoracic, and lumbar vertebrae. In the second stage, we train an

iterative 3D Fully Convolutional Networks to segment individual vertebrae in the

bounding box. The input to the second network has an auxiliary channel in addition

to the 3D CT images. Given the segmented vertebrae regions in the auxiliary

channel, the network output the next vertebra. The proposed method is evaluated in

terms of segmentation, localization and identification accuracy with two public

datasets of 15 3D CT images from the MICCAI CSI 2014 workshop challenge and 302 3D

CT images with various pathologies. Our method achieved a mean Dice score of 96%, a

mean localization error of 8.3 mm, and a mean identification rate of 84%. In

summary, our method achieved better performance than all existing works in all the

three metrics.

@InProceedings{MasuzawaMICCAI2020,

author = {Naoto Masuzawa and Yoshiro Kitamura and Keigo Nakamura and Satoshi Iizuka and Edgar Simo-Serra},

title = {{Automatic Segmentation, Localization and Identification of Vertebrae in 3D CT Images Using Cascaded Convolutional Neural Networks}},

booktitle = "Proceedings of the International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI)",

year = 2020,

}

TopNet: Topology Preserving Metric Learning for Vessel Tree

Reconstruction and Labelling

Deepak Keshwani, Yoshiro Kitamura, Satoshi Ihara, Satoshi Iizuka, Edgar

Simo-Serra

International Conference on Medical Image Computing and Computer

Assisted Intervention (MICCAI), 2020

Reconstructing Portal Vein and Hepatic Vein trees from contrast enhanced abdominal

CT scans is a prerequisite for preoperative liver surgery simulation. Existing deep

learning based methods treat vascular tree reconstruction as a semantic

segmentation problem. However, vessels such as hepatic and portal vein look very

similar locally and need to be traced to their source for robust label assignment.

Therefore, semantic segmentation by looking at local 3D patch results in noisy

misclassifications. To tackle this, we propose a novel multi-task deep learning

architecture for vessel tree reconstruction. The network architecture

simultaneously solves the task of detecting voxels on vascular centerlines (i.e.

nodes) and estimates connectivity between center-voxels (edges) in the tree

structure to be reconstructed. Further, we propose a novel connectivity metric

which considers both inter-class distance and intra-class topological distance

between center-voxel pairs. Vascular trees are reconstructed starting from the

vessel source using the learned connectivity metric using the shortest path tree

algorithm. A thorough evaluation on public IRCAD dataset shows that the proposed

method considerably outperforms existing semantic segmentation based methods. To

the best of our knowledge, this is the first deep learning based approach which

learns multi-label tree structure connectivity from images.

@InProceedings{KeshwaniMICCAI2020,

author = {Deepak Keshwani and Yoshiro Kitamura and Satoshi Ihara and Satoshi Iizuka and Edgar Simo-Serra},

title = {{TopNet: Topology Preserving Metric Learning for Vessel Tree Reconstruction and Labelling}},

booktitle = "Proceedings of the International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI)",

year = 2020,

}

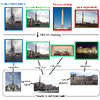

We propose an efficient pipeline for large-scale landmark image retrieval that

addresses the diversity of the dataset through two-stage discriminative re-ranking.

Our approach is based on embedding the images in a feature-space using a

convolutional neural network trained with a cosine softmax loss. Due to the

variance of the images, which include extreme viewpoint changes such as having to

retrieve images of the exterior of a landmark from images of the interior, this is

very challenging for approaches based exclusively on visual similarity. Our

proposed re-ranking approach improves the results in two steps: in the sort-step,

k-nearest neighbor search with soft-voting to sort the retrieved results based on

their label similarity to the query images, and in the insert-step, we add

additional samples from the dataset that were not retrieved by image-similarity.

This approach allows overcoming the low visual diversity in retrieved images.

In-depth experimental results show that the proposed approach significantly

outperforms existing approaches on the challenging Google Landmarks Datasets. Using

our methods, we achieved 1st place in the Google Landmark Retrieval 2019 challenge

on Kaggle.

@InProceedings{YokooCVPRW2020,

author = {Shuhei Yokoo and Kohei Ozaki and Edgar Simo-Serra and Satoshi Iizuka},

title = {{Two-stage Discriminative Re-ranking for Large-scale Landmark Retrieval}},

booktitle = "Proceedings of the Conference on Computer Vision and Pattern Recognition Workshops (CVPRW)",

year = 2020,

}

We present an algorithm to generate digital painting lighting effects from a single

image. Our algorithm is based on a key observation: artists use many overlapping

strokes to paint lighting effects, i.e., pixels with dense stroke history tend to

gather more illumination strokes. Based on this observation, we design an algorithm

to both estimate the density of strokes in a digital painting using color geometry,

and then generate novel lighting effects by mimicking artists' coarse-to-fine

workflow. Coarse lighting effects are first generated using a wave transform, and

then retouched according to the stroke density of the original illustrations into

usable lighting effects.

Our algorithm is content-aware, with generated lighting effects naturally adapting to image structures, and can be used as an interactive tool to simplify current labor-intensive workflows for generating lighting effects for digital and matte paintings. In addition, our algorithm can also produce usable lighting effects for photographs or 3D rendered images. We evaluate our approach with both an in-depth qualitative and a quantitative analysis which includes a perceptual user study. Results show that our proposed approach is not only able to produce favorable lighting effects with respect to existing approaches, but also that it is able to significantly reduce the needed interaction time.

Our algorithm is content-aware, with generated lighting effects naturally adapting to image structures, and can be used as an interactive tool to simplify current labor-intensive workflows for generating lighting effects for digital and matte paintings. In addition, our algorithm can also produce usable lighting effects for photographs or 3D rendered images. We evaluate our approach with both an in-depth qualitative and a quantitative analysis which includes a perceptual user study. Results show that our proposed approach is not only able to produce favorable lighting effects with respect to existing approaches, but also that it is able to significantly reduce the needed interaction time.

@Article{ZhangTOG2020,

author = {Lvmin Zhang and Edgar Simo-Serra and Yi Ji and Chunping Liu},

title = {{Generating Digital Painting Lighting Effects via RGB-space Geometry}},

journal = "Transactions on Graphics (Presented at SIGGRAPH)",

year = 2020,

volume = 39,

number = 2,

}

2019

The remastering of vintage film comprises of a diversity of sub-tasks including

super-resolution, noise removal, and contrast enhancement which aim to restore the

deteriorated film medium to its original state. Additionally, due to the technical

limitations of the time, most vintage film is either recorded in black and white,

or has low quality colors, for which colorization becomes necessary. In this work,

we propose a single framework to tackle the entire remastering task

semi-interactively. Our work is based on temporal convolutional neural networks

with attention mechanisms trained on videos with data-driven deterioration

simulation. Our proposed source-reference attention allows the model to handle an

arbitrary number of reference color images to colorize long videos without the need

for segmentation while maintaining temporal consistency. Quantitative analysis

shows that our framework outperforms existing approaches, and that, in contrast to

existing approaches, the performance of our framework increases with longer videos

and more reference color images.

@Article{IizukaSIGGRAPHASIA2019,

author = {Satoshi Iizuka and Edgar Simo-Serra},

title = {{DeepRemaster: Temporal Source-Reference Attention Networks for Comprehensive Video Enhancement}},

journal = "ACM Transactions on Graphics (SIGGRAPH Asia)",

year = 2019,

volume = 38,

number = 6,

}

Spatially placing an object onto a background is an essential operation in graphic

design and facilitates many different applications such as virtual try-on. The

placing operation is formulated as a geometric inference problem for given

foreground and background images, and has been approached by spatial transformer

architecture.In this paper, we propose a simple yet effective regularization

technique to guide the geometric parameters based on user-defined trust regions.

Our approach stabilizes the training process of spatial transformer networks and

achieves a high-quality prediction with single-shot inference. Our proposed method

is independent of initial parameters, and can easily incorporate various priors to

prevent different types of trivial solutions. Empirical evaluation with the

Abstract Scenes and CelebA datasets shows that our approach achieves favorable

results compared to baselines.

@InProceedings{KikuchiICCVW2019,

author = {Kotaro Kikuchi and Kota Yamaguchi and Edgar Simo-Serra and Tetsunori Kobayashi},

title = {{Regularized Adversarial Training for Single-shot Virtual Try-On}},

booktitle = "Proceedings of the International Conference on Computer Vision Workshops (ICCVW)",

year = 2019,

}

ImageNet pre-training has been regarded as essential for training accurate object

detectors for a long time. Recently, it has been shown that object detectors

trained from randomly initialized weights can be on par with those fine-tuned from

ImageNet pre-trained models. However, effect of pre-training and the differences

caused by pre-training are still not fully understood. In this paper, we analyze

the eigenspectrum dynamics of the covariance matrix of each feature map in object

detectors. Based on our analysis on ResNet-50, Faster R-CNN with FPN, and Mask

R-CNN, we show that object detectors trained from ImageNet pre-trained models and

those trained from scratch behave differently from each other even if both object

detectors have similar accuracy. Furthermore, we propose a method for automatically

determining the widths (the numbers of channels) of object detectors based on the

eigenspectrum. We train Faster R-CNN with FPN from randomly initialized weights,

and show that our method can reduce ~27% of the parameters of ResNet-50 without

increasing Multiply-Accumulate operations (MACs) and losing accuracy. Our results

indicate that we should develop more appropriate methods for transferring knowledge

from image classification to object detection (or other tasks).

@InProceedings{ShinyaICCVW2019,

author = {Yosuke Shinya and Edgar Simo-Serra and Taiji Suzuki},

title = {{Understanding the Effects of Pre-training for Object Detectors via Eigenspectrum}},

booktitle = "Proceedings of the International Conference on Computer Vision Workshops (ICCVW)",

year = 2019,

}

Virtual Thin Slice: 3D Conditional GAN-based Super-resolution for CT

Slice Interval

Akira Kudo, Yoshiro Kitamura, Yuanzhong Li, Satoshi Iizuka, Edgar Simo-Serra

International Conference on Medical Image Computing and Computer Assisted

Intervention Workshops (MICCAIW), 2019

Many CT slice images are stored with large slice intervals to reduce storage size

in clinical practice. This leads to low resolution perpendicular to the slice

images (i.e., z-axis), which is insufficient for 3D visualization or image

analysis. In this paper, we present a novel architecture based on conditional

Generative Adversarial Networks (cGANs) with the goal of generating high resolution

images of main body parts including head, chest, abdomen and legs. However, GANs

are known to have a difficulty with generating a diversity of patterns due to a

phenomena known as mode collapse. To overcome the lack of generated pattern

variety, we propose to condition the discriminator on the different body parts.

Furthermore, our generator networks are extended to be three dimensional fully

convolutional neural networks, allowing for the generation of high resolution

images from arbitrary fields of view. In our verification tests, we show that the

proposed method obtains the best scores by PSNR/SSIM metrics and Visual Turing

Test, allowing for accurate reproduction of the principle anatomy in high

resolution. We expect that the proposed method contribute to effective utilization

of the existing vast amounts of thick CT images stored in hospitals.

@InProceedings{KudoMICCAIW2019,

author = {Akira Kudo and Yoshiro Kitamura and Yuanzhong Li and Satoshi Iizuka and Edgar Simo-Serra},

title = {{Virtual Thin Slice: 3D Conditional GAN-based Super-resolution for CT Slice Interval}},

booktitle = "Proceedings of the International Conference on Medical Image Computing and Computer Assisted Intervention Workshops (MICCAIW)",

year = 2019,

}

固有値分布に基づく物体検出CNNの事前学習効果の分析

進矢 陽介, シモセラ エドガー, 鈴木 大慈

第22回画像の認識・理解シンポジウム(MIRU), 2019

Temporal Distance Matrices for Workout Form Assessment

Ryoji Ogata, Edgar Simo-Serra, Satoshi Iizuka, Hiroshi Ishikawa

第22回画像の認識・理解シンポジウム(MIRU、ショートオーラル), 2019

Regularizing Adversarial Training for Single-shot Object

Placement

Kotaro Kikuchi, Kota Yamaguchi, Edgar Simo-Serra, Tetsunori Kobayashi

第22回画像の認識・理解シンポジウム(MIRU、ショートオーラル), 2019

DeepRemaster: Temporal Source-Reference

Attentionを用いた動画のデジタルリマスター

飯塚 里志,シモセラ エドガー

Visual Computing / グラフィクスとCAD 合同シンポジウム(オーラル) [最優秀研究発表賞], 2019

The preparation of large amounts of high-quality training data has always been the

bottleneck for the performance of supervised learning methods. It is especially

time-consuming for complicated tasks such as photo enhancement. A recent approach

to ease data annotation creates realistic training data automatically with

optimization. In this paper, we improve upon this approach by learning

image-similarity which, in combination with a Covariance Matrix Adaptation

optimization method, allows us to create higher quality training data for enhancing

photos. We evaluate our approach on challenging real world photo-enhancement images

by conducting a perceptual user study, which shows that its performance compares

favorably with existing approaches.

@InProceedings{OmiyaCVPRW2019,

author = {Mayu Omiya and Yusuke Horiuchi and Edgar Simo-Serra and Satoshi Iizuka and Hiroshi Ishikawa},

title = {{Optimization-Based Data Generation for Photo Enhancement}},

booktitle = "Proceedings of the Conference on Computer Vision and Pattern Recognition Workshops (CVPRW)",

year = 2019,

}

Temporal Distance Matrices for Squat Classification

Ryoji Ogata, Edgar Simo-Serra, Satoshi Iizuka, Hiroshi Ishikawa

Conference in Computer Vision and Pattern Recognition Workshops (CVPRW),

2019

When working out, it is necessary to perform the same action many times for it to

have effect. If the action, such as squats or bench pressing, is performed with

poor form, it can lead to serious injuries in the long term. For this purpose, we

present an action dataset of squats where different types of poor form have been

annotated with a diversity of users and backgrounds, and propose a model, based on

temporal distance matrices, for the classification task. We first run a 3D pose

detector, then we normalize the pose and compute the distance matrix, in which each

element represents the distance between two joints. This representation is

invariant to differences in individuals, global translation, and global rotation,

allowing for high generalization to real world data. Our classification model

consists of a CNN with 1D convolutions. Results show that our method significantly

outperforms existing approaches for the task.

@InProceedings{OgataCVPRW2019,

author = {Ryoji Ogata and Edgar Simo-Serra and Satoshi Iizuka and Hiroshi Ishikawa},

title = {{Temporal Distance Matrices for Squat Classification}},

booktitle = "Proceedings of the Conference on Computer Vision and Pattern Recognition Workshops (CVPRW)",

year = 2019,

}

We address the problem of conditional image generation of synthesizing a new image

of an individual given a reference image and target pose. We base our approach on

generative adversarial networks and leverage deformable skip connections to deal

with pixel-to-pixel misalignments, self-attention to leverage complementary

features in separate portions of the image, e.g., arms or legs, and spectral

normalization to improve the quality of the synthesized images. We train the

synthesis model with a nearest-neighbour loss in combination with a relativistic

average hinge adversarial loss. We evaluate on the Market-1501 dataset and show how

our proposed approach can surpass existing approaches in conditional image

synthesis performance.

@InProceedings{HoriuchiMVA2019,

author = {Yusuke Horiuchi and Edgar Simo-Serra and Satoshi Iizuka and Hiroshi Ishikawa},

title = {{Spectral Normalization and Relativistic Adversarial Training for Conditional Pose Generation with Self-Attention}},

booktitle = "International Conference on Machine Vision Applications (MVA)",

year = 2019,

}

Re-staining Pathology Images by FCNN

Masayuki Fujitani, Yoshihiko Mochizuki, Satoshi Iizuka, Edgar Simo-Serra,

Hirokazu Kobayashi, Chika Iwamoto, Kenoki Ohuchida, Makoto Hashizume, Hidekata

Hontani, Hiroshi Ishikawa

International Conference on Machine Vision Applications (MVA), 2019

In histopathology, pathologic tissue samples are stained using one of various

techniques according to the desired features to be observed in microscopic

examination. One problem is that staining is irreversible. Once a tissue slice is

stained using a technique, it cannot be re-stained using another. In this work, we

propose a method for simulated re-staining using a Fully Convolutional Neural

Network (FCNN).We convert a digitally scanned pathology image of a sample, stained

using one technique, into another image with a different simulated stain. The

challenge is that the ground truth cannot be obtained: the network needs training

data, which in this case would be pairs of images of a sample stained in two

different techniques. We overcome this problem by using the images of consecutive

slices that are stained using the two distinct techniques, screening for

morphological similarity by comparing their density components in the HSD color

space. We demonstrate the effectiveness of the method in the case of converting

hematoxylin and eosin-stained images into Masson’s trichrome stained images.

@InProceedings{FujitaniMVA2019,

author = {Masayuki Fujitani and Yoshihiko Mochizuki and Satoshi Iizuka and Edgar Simo-Serra and Hirokazu Kobayashi and Chika Iwamoto and Kenoki Ohuchida and Makoto Hashizume and Hidekata Hontani and Hiroshi Ishikawa},

title = {{Re-staining Pathology Images by FCNN}},

booktitle = "International Conference on Machine Vision Applications (MVA, Oral)",

year = 2019,

}

2018

We address the problem of automatic photo enhancement, in which the challenge is to

determine the optimal enhancement for a given photo according to its content. For

this purpose, we train a convolutional neural network to predict the best

enhancement for given picture. While such machine learning techniques have shown

great promise in photo enhancement, there are some limitations. One is the problem

of interpretability, i.e., that it is not easy for the user to discern what has

been done by a machine. In this work, we leverage existing manual photo enhancement

tools as a black-box model, and predict the enhancement parameters of that model.

Because the tools are designed for human use, the resulting parameters can be

interpreted by their users. Another problem is the difficulty of obtaining training

data. We propose generating supervised training data from high-quality professional

images by randomly sampling realistic de-enhancement parameters. We show that this

approach allows automatic enhancement of photographs without the need for large

manually labelled supervised training datasets.

@InProceedings{OmiyaSIGGRAPASIABRIEF2018,

author = {Mayu Omiya and Edgar Simo-Serra and Satoshi Iizuka and Hiroshi Ishikawa},

title = {{Learning Photo Enhancement by Black-Box Model Optimization Data Generation}},

booktitle = "SIGGRAPH Asia 2018 Technical Briefs",

year = 2018,

}

We present an interactive approach for inking, which is the process of turning a

pencil rough sketch into a clean line drawing. The approach, which we call the

Smart Inker, consists of several "smart" tools that intuitively react to user

input, while guided by the input rough sketch, to efficiently and naturally connect

lines, erase shading, and fine-tune the line drawing output. Our approach is

data-driven: the tools are based on fully convolutional networks, which we train to

exploit both the user edits and inaccurate rough sketch to produce accurate line

drawings, allowing high-performance interactive editing in real-time on a variety

of challenging rough sketch images. For the training of the tools, we developed two

key techniques: one is the creation of training data by simulation of vague and

quick user edits; the other is a line normalization based on learning from vector

data. These techniques, in combination with our sketch-specific data augmentation,

allow us to train the tools on heterogeneous data without actual user interaction.

We validate our approach with an in-depth user study, comparing it with

professional illustration software, and show that our approach is able to reduce

inking time by a factor of 1.8x while improving the results of amateur users.

@Article{SimoSerraSIGGRAPH2018,

author = {Edgar Simo-Serra and Satoshi Iizuka and Hiroshi Ishikawa},

title = {{Real-Time Data-Driven Interactive Rough Sketch Inking}},

journal = "ACM Transactions on Graphics (SIGGRAPH)",

year = 2018,

volume = 37,

number = 4,

}

再帰型畳み込みニューラルネットワークによる航空写真の多クラスセグメンテーション

高橋 宏輝,飯塚 里志,シモセラ エドガー,石川 博

第21回画像の認識・理解シンポジウム(MIRU), 2018

背景と反射成分の同時推定による画像の映り込み除去

佐藤 良亮,飯塚 里志,シモセラ エドガー,石川 博

第21回画像の認識・理解シンポジウム(MIRU、オーラル) [学生奨励賞], 2018

補正パラメータ学習による写真の高品質自動補正

近江谷 真由,シモセラ エドガー,飯塚 里志,石川 博

第21回画像の認識・理解シンポジウム(MIRU), 2018

SSDによる郵便物ラベルの認識及び高速化

尾形 亮二,望月 義彦,飯塚 里志,シモセラ エドガー,石川 博

第21回画像の認識・理解シンポジウム(MIRU), 2018

FCNNを用いた病理画像の染色変換

藤谷 真之,望月 義彦,飯塚 里志,シモセラ エドガー,小林 裕和,岩本 千佳,大内田 研宙,橋爪 誠,本谷 秀堅,石川 博

第21回画像の認識・理解シンポジウム(MIRU、オーラル) [学生奨励賞], 2018

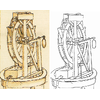

We propose a fully automatic approach to restore aged old line drawings. We

decompose the task into two subtasks: the line extraction subtask, which aims to

extract line fragments and remove the paper texture background, and the restoration

subtask, which fills in possible gaps and deterioration of the lines to produce a

clean line drawing. Our approach is based on a convolutional neural network that

consists of two sub-networks corresponding to the two subtasks. They are trained as

part of a single framework in an end-to-end fashion. We also introduce a new

dataset consisting of manually annotated sketches by Leonardo da Vinci which, in

combination with a synthetic data generation approach, allows training the network

to restore deteriorated line drawings. We evaluate our method on challenging

500-year-old sketches and compare with existing approaches with a user study, in

which it is found that our approach is preferred 72.7% of the time.

@Article{SasakiCGI2018,

author = {Sasaki Kazuma and Satoshi Iizuka and Edgar Simo-Serra and Hiroshi Ishikawa}},

title = {{Learning to Restore Deteriorated Line Drawing}},

journal = "The Visual Computer (Proc. of Computer Graphics International)",

year = {2018},

volume = {34},

pages = {1077--1085},

}

特集「漫画・線画の画像処理」ラフスケッチの自動線画化技術

シモセラ エドガー,飯塚 里志

映像情報メディア学会誌2018年5月号, 2018

We present an integral framework for training sketch simplification networks that

convert challenging rough sketches into clean line drawings. Our approach augments

a simplification network with a discriminator network, training both networks

jointly so that the discriminator network discerns whether a line drawing is a real

training data or the output of the simplification network, which in turn tries to

fool it. This approach has two major advantages. First, because the discriminator

network learns the structure in line drawings, it encourages the output sketches of

the simplification network to be more similar in appearance to the training

sketches. Second, we can also train the simplification network with additional

unsupervised data, using the discriminator network as a substitute teacher. Thus,

by adding only rough sketches without simplified line drawings, or only line

drawings without the original rough sketches, we can improve the quality of the

sketch simplification. We show how our framework can be used to train models that

significantly outperform the state of the art in the sketch simplification task,

despite using the same architecture for inference. We additionally present an

approach to optimize for a single image, which improves accuracy at the cost of

additional computation time. Finally, we show that, using the same framework, it is

possible to train the network to perform the inverse problem, i.e., convert simple

line sketches into pencil drawings, which is not possible using the standard mean

squared error loss. We validate our framework with two user tests, where our

approach is preferred to the state of the art in sketch simplification 92.3% of the

time and obtains 1.2 more points on a scale of 1 to 5.

@Article{SimoSerraTOG2018,

author = {Edgar Simo-Serra and Satoshi Iizuka and Hiroshi Ishikawa},

title = {{Mastering Sketching: Adversarial Augmentation for Structured Prediction}},

journal = "Transactions on Graphics (Presented at SIGGRAPH)",

year = 2018,

volume = 37,

number = 1,

}

2017

Content-aware image resizing aims to reduce the size of an image without touching

important objects and regions. In seam carving, this is done by assessing the

importance of each pixel by an energy function and repeatedly removing a string of

pixels avoiding pixels with high energy. However, there is no single energy

function that is best for all images: the optimal energy function is itself a

function of the image. In this paper, we present a method for predicting the

quality of the results of resizing an image with different energy functions, so as

to select the energy best suited for that particular image. We formulate the

selection as a classification problem; i.e., we 'classify' the input into the class

of images for which one of the energies works best. The standard approach would be

to use a CNN for the classification. However, the existence of a fully connected

layer forces us to resize the input to a fixed size, which obliterates useful

information, especially lower-level features that more closely relate to the

energies used for seam carving. Instead, we extract a feature from internal

convolutional layers, which results in a fixed-length vector regardless of the

input size, making it amenable to classification with a Support Vector Machine.

This formulation of the algorithm selection as a classification problem can be used

whenever there are multiple approaches for a specific image processing task. We

validate our approach with a user study, where our method outperforms recent seam

carving approaches.

@InProceedings{SasakiACPR2017,

author = {Kazuma Sasaki and Yuya Nagahama and Zheng Ze and Satoshi Iizuka and Edgar Simo-Serra and Yoshihiko Mochizuki and Hiroshi Ishikawa},

title = {{Adaptive Energy Selection For Content-Aware Image Resizing}},

booktitle = "Proceedings of the Asian Conference on Pattern Recognition (ACPR)",

year = 2017,

}

画像類似度を考慮したデータセットを用いて学習したCNNによる病理画像の染色変換

藤谷 真之,望月 義彦,飯塚 里志,シモセラ エドガー,石川 博

ヘルスケア・医療情報通信技術研究会(MICT), 2017

In this work, we perform an experimental analysis of the differences of both how